How we make sure we’re using the best of the best, as the generative AI landscape quickly evolves

When we began building CoCounsel in September of 2022, we were among the first companies to access and build on OpenAI’s not-yet-released LLM, GPT-4, at the time widely regarded as the largest and most powerful generative AI model ever created. Since then, a new LLM has been released practically every week, some of the most notable being Google’s Bard and PaLM, Microsoft’s Orca, and Meta’s Llama. The most recent is GPT-4 Turbo, the new model behind ChatGPT.

Every time a new model or capability (such as fine-tuning) becomes available, our team evaluates it to ensure we’re delivering the best possible experience with CoCounsel, based on the available technology. For instance, one theory about how to get the best results from a generative AI product is to fine-tune a host of smaller LLMs for specific tasks, which could perform as well as or better than GPT-4 on its own, depending on how difficult any given task is. We tested this theory extensively, and found that we were unable to fine-tune smaller models to perform as well as GPT-4 for CoCounsel use cases. While in some cases they did well on extremely narrow tasks, that’s not useful for a product (CoCounsel) designed to deliver broadly applicable skills for legal professionals, e.g., to be able to summarize every kind of document imaginable.

But of course testing takes time and resources, so we also need to complete this work efficiently. And evaluation for expert domains such as medicine and law is particularly expensive. So how do we conduct a trustworthy and thorough evaluation while minimizing human labor? How do we decide how much effort is worthwhile, given a particular model’s promise? And how do we decide whether to use any of the new or updated models we test?

Methodology matters

We’ve developed a three-stage evaluation pipeline, minimizing human effort and thereby making it possible for us to effectively and efficiently examine new LLMs. Each stage is more labor intensive than the one prior, and an LLM under review only moves to the next stage of evaluation if it “passes” the one prior. This method has allowed us to stay on top of all the major new LLMs and LLM capabilities that are released (and keep tabs on the thousands of smaller models), even with a small team.
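The gating logic described above can be sketched in a few lines of Python. This is only an illustration of the idea that a model moves on only if it passes the previous stage; the `Stage` class, the `run_pipeline` function, and the numeric thresholds are hypothetical names and values, not our production code.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Stage:
    """One evaluation stage (hypothetical structure, for illustration only)."""
    name: str
    evaluate: Callable[[str], float]  # takes a model identifier, returns a score in [0, 1]
    threshold: float                  # assumed pass mark for this stage


def run_pipeline(model: str, stages: List[Stage]) -> Optional[str]:
    """Run stages in order, cheapest first.

    Returns the name of the first stage the model fails, or None if it
    passes every stage. Later (more expensive) stages are never run for
    a model that fails an earlier one.
    """
    for stage in stages:
        score = stage.evaluate(model)
        if score < stage.threshold:
            return stage.name
    return None
```

Because the stages are ordered from least to most labor intensive, a model that fails the automated benchmarks never consumes any expert reviewer time.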

Stage 1: Automated public benchmarks

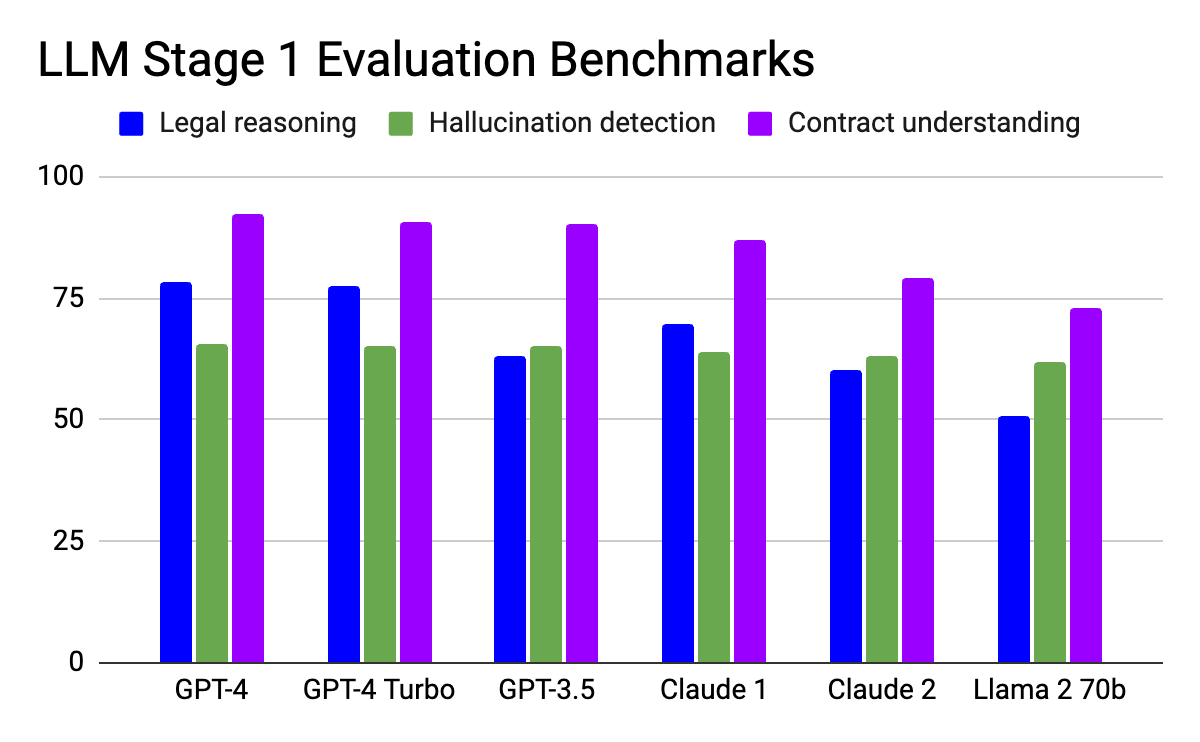

The goal of stage 1 is to filter out the LLMs that aren’t even worth testing in depth, using automatic evaluation with public benchmarks, or open-access rubrics. At this stage we’re measuring basic capabilities including legal reasoning (for which we use LegalBench, the LSAT, the bar exam, and similar tests), hallucination detection (CoGenSumm, XSumFaith), and contract understanding (CUAD, MAUD). We also perform custom tests for other capabilities we care about, such as retrieval-augmented generation (or RAG), page number extraction, formatting, and faithfulness to source documentation.

At this stage we’re looking simply for rough baseline capability, without performing any prompt engineering. Some datasets, such as LegalBench, come with preset prompts, but for everything else we use simple generic prompts (e.g., “Read the passage and choose A, B, C, or D”). In total we evaluate more than 10,000 prompt-response pairs with ground truth across a wide range of test categories.
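As a rough illustration of what scoring a multiple-choice benchmark with a generic prompt can look like, here is a minimal Python sketch. The item fields (`passage`, `question`, `choices`, `answer`), the prompt template, and the answer-extraction heuristic are all assumptions made for the example; `model` stands for any function that maps a prompt string to a response string.

```python
import re
from typing import Callable, Dict, List, Optional

# Generic, un-engineered prompt (the wording beyond the first sentence is assumed).
GENERIC_PROMPT = (
    "Read the passage and choose A, B, C, or D.\n\n"
    "{passage}\n\n{question}\n{choices}"
)


def extract_choice(response: str) -> Optional[str]:
    """Return the first standalone capital letter A-D in the response, if any.

    This is a crude heuristic: a response that starts with the article "A"
    would be misread, so real harnesses usually constrain the output format.
    """
    match = re.search(r"\b([ABCD])\b", response)
    return match.group(1) if match else None


def accuracy(items: List[Dict], model: Callable[[str], str]) -> float:
    """Fraction of items where the extracted choice equals the ground truth."""
    if not items:
        return 0.0
    correct = 0
    for item in items:
        prompt = GENERIC_PROMPT.format(
            passage=item["passage"],
            question=item["question"],
            choices="\n".join(item["choices"]),
        )
        if extract_choice(model(prompt)) == item["answer"]:
            correct += 1
    return correct / len(items)
```

Since every item carries its own ground-truth answer, this kind of scoring needs no human in the loop, which is what makes it suitable as a first filter.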

Stage 2: Task-specific semi-automated evaluation

For LLMs that “pass” stage 1, we take a second look at them, fully exploring the potential of in-depth prompt engineering, specialized prompting paradigms such as ReAct, and fine-tuning, when it’s available. Concurrently we begin applying the models to relevant use cases, such as summarizing contracts or extracting useful passages for legal research.

An important consideration in this stage is if, when, and how to use GPT-4 to score results, which would save considerable time and resources. After all, much of the research in the LLM space inherently trusts GPT-4 to evaluate the outputs of smaller LLMs reliably and accurately. So we explored this in depth and identified two very different setups for GPT-4-based evaluation.

In the first, task-level evaluation, we broadly define success criteria across an entire task (e.g., a legal research memo should be succinct, responsive to the user query, and free of hallucinations) and ask GPT-4 to evaluate the quality of responses based on those criteria. In the second, sample-level evaluation, a human expert first defines success criteria for each individual sample in a test (e.g., “For this particular query, the answer should mention X and Y and not mention Z”), and then we use GPT-4 to ensure that each response matches its associated human-defined criteria.

We’ve found sample-level evaluation to be much more robust and reliable across different tasks. While a human is still involved in initially defining success criteria for each individual sample, once the criteria have been defined, they can be reused in an automated fashion for all future evaluations, saving considerable time and work when we test new LLMs and LLM-related methodologies.
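To make the sample-level idea concrete, here is a minimal Python sketch of reusable per-sample criteria. All names (`SampleCriteria`, `passes`, `pass_rate`) are hypothetical. The `keyword_judge` function is a deliberately simple stand-in for the GPT-4 judgment step: it does plain substring matching so the example runs without any API, whereas in practice the judge would be an LLM call deciding whether a response conveys a given point.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# A judge answers: does this response mention this point?
Judge = Callable[[str, str], bool]


@dataclass
class SampleCriteria:
    """Expert-written criteria for one test sample (hypothetical structure)."""
    query: str
    must_mention: List[str] = field(default_factory=list)
    must_not_mention: List[str] = field(default_factory=list)


def keyword_judge(response: str, point: str) -> bool:
    """Stand-in judge: case-insensitive substring match, NOT an LLM call."""
    return point.lower() in response.lower()


def passes(response: str, criteria: SampleCriteria, judge: Judge = keyword_judge) -> bool:
    """True if the response covers every required point and no forbidden one."""
    covers_required = all(judge(response, p) for p in criteria.must_mention)
    hits_forbidden = any(judge(response, p) for p in criteria.must_not_mention)
    return covers_required and not hits_forbidden


def pass_rate(generate: Callable[[str], str], suite: List[SampleCriteria],
              judge: Judge = keyword_judge) -> float:
    """Fraction of samples in the suite that a model (via `generate`) passes."""
    if not suite:
        return 0.0
    return sum(passes(generate(c.query), c, judge) for c in suite) / len(suite)
```

The expert effort goes into writing each `SampleCriteria` once; after that, the same suite can be passed to `pass_rate` for every new model or prompting method.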

We’ve built a massive suite of automated tests like this over time, covering all the tasks and use cases we want CoCounsel to be able to handle. Once the tests are written, they can be run over and over again without further human labor.

Stage 3: Human evaluation

The goal of this stage is to ensure that before we deploy to production, the LLM under evaluation doesn’t break the system or fail on important use cases or even edge cases. For this we generally perform an A/B-style test between our production model and the new model, using a suite of the most important use cases and edge cases. Then we ask our Trust Team subject matter experts to compare the final outputs from the two models on a Likert scale. This stage is critical for quality control, but it is incredibly labor intensive. It wouldn’t make sense for us to spend that time on new LLMs, prompting paradigms, or training methods that would never pass, which is why we weed them out earlier.
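One simple way to summarize such comparative ratings is sketched below in Python. The scale convention is an assumption made for the example, not a description of our internal rubric: each rating is an integer from 1 to 5, where 1 means the production output is much better, 3 means the two are equal, and 5 means the candidate output is much better. The function name `summarize_ratings` is likewise hypothetical.

```python
from statistics import mean
from typing import Dict, List


def summarize_ratings(ratings: List[int]) -> Dict[str, float]:
    """Summarize 1-5 comparative Likert ratings from expert reviewers.

    Assumed convention: 1 = production much better, 3 = equal,
    5 = candidate much better.
    """
    if not ratings:
        raise ValueError("no ratings to summarize")
    if any(r not in range(1, 6) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    n = len(ratings)
    return {
        "mean": mean(ratings),
        "candidate_preferred": sum(r > 3 for r in ratings) / n,
        "tie": sum(r == 3 for r in ratings) / n,
        "production_preferred": sum(r < 3 for r in ratings) / n,
    }
```

Reporting the preference shares alongside the mean helps distinguish a candidate that is slightly better everywhere from one that is much better on some cases and much worse on others.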

Ultimately, for high-stakes LLM applications there are ways to use automated and semi-automated methods to reduce the burden on human evaluators and annotators. But human experts are still needed in the evaluation pipeline to build tests and ensure the reliability of the final product, meaning that currently for expert domains such as legal, AI is augmenting human labor rather than replacing it altogether.

Adapted from a keynote talk given by Casetext senior machine learning researcher Shang Gao at the 3rd International Workshop on Mining and Learning in the Legal Domain (MLLD-2023).