Image: Holley Robinson, EDRM.

What can we do to address the challenge of Deepfakes being presented as relevant and authentic evidence in the justice system?

Deepfake technology poses a serious challenge to establishing and rebutting the authenticity of digital exhibits in legal proceedings. The rapid advancement of generative AI technology enables the creation and proliferation of high-quality deepfake evidence quickly and at minimal or no cost. Whether you are a lawyer, judge, legal practitioner, or jury member, you can no longer fully trust your eyes and ears to distinguish between authentic evidence and Deepfake Legal Evidence.

With a 900% year-over-year increase, deepfakes are already impacting the business world, pop culture, election campaigns, and even national security. While the creative opportunities excite AI enthusiasts, the new technologies present serious misinformation concerns. In a world where truth itself is becoming elusive, the erosion of trust in digital media has profound implications for the integrity of our justice system, where the credibility of evidence is paramount. Who wants to rely on false digital legal evidence when forming a legal strategy for litigation? Who wants to present Deepfake Legal Evidence in a court hearing? And worst of all, who wants to deprive someone of their freedom based on an audio or video file that is AI-manipulated?

To meet baseline duties of competence, lawyers must be prepared to detect and address deepfakes, whether to support a claim that audio or video evidence is fake or to prove that it is authentic. As the AI v. AI arms race over deepfake technology intensifies, it is also spilling into the legal system. This state of play calls for a trilateral collaboration between lawyers, AI deepfake detection companies, and forensic experts, who collectively play a crucial role in either authenticating digital legal evidence or proving that it is fake. Courts have already squarely rejected claims that video evidence is a deepfake when those claims were not supported by expert testimony; a bare assertion that a voice or face that looks and sounds exactly like the client is not the client does not hold up in court.

The trilateral collaboration between lawyers, AI deepfake detection companies, and forensic experts will also face new hurdles under the amended Federal Rule of Evidence 702, including the new explicit burden of proof and the amendment’s emphasis on the court’s “essential” gatekeeping role deciding threshold questions of reliability and admissibility of expert testimony.

The Marked Increase of Discoverable Audio & Video Evidence

The advancement of deepfake technology coincides with an emerging shift in eDiscovery data sources, leading to a significant increase in discoverable audio and video evidence. “Litigation used to focus more on documents; now, it involves a substantial volume of data impacted by how people communicate,” said Chris Haley, VP of Practice Empowerment aiR at Relativity. “Over the last several years, there has been a transition from traditional office meetings to video conferences that create audio and video recording, and there has been a continued increase in audio and video evidence in general. Statistics presented at Relativity Fest conference in London (2024) indicate a 40% year-over-year growth in audio files and a 130% growth in video files on the Relativity platform. These trends are growing, as is the challenge of deepfakes in digital legal evidence. Both require equal attention, and the time to prepare for these challenges is now.”

Deepfakes are Already in Courts

There is nothing novel about fake evidence in litigation, and courts have repeatedly dealt with fake text messages, emails, chats, documents, and the like. Given how rapidly deepfake technology is advancing and how pervasively deepfakes are being injected into our day-to-day lives and into our emerging data sources in eDiscovery, it is unsurprising that claims of deepfake evidence are already surfacing in U.S. courts. Emerging cases include U.S. v. Guy Wesley Reffitt, U.S. v. Anthony Williams, U.S. v. Joshua Christopher Doolin, and Sz Huang et al. v. Tesla, Inc. et al.

These cases exemplify how parties to a dispute are already filing motions in limine challenging the authenticity of certain video or audio at trial, arguing that the media is a deepfake. On the flip side, in a criminal case in Pennsylvania, Commonwealth of Pennsylvania v. Spone, when a prosecutor claimed that a video was a fake created to victimize a teen cheerleader, the defendant used expert testimony to prove it was authentic. According to iDS CEO Dan Regard, “We work on many cases where evidence is suspect. We show that evidence is valid as often as we show that it is invalid. The trend is in the marked increased scrutiny, especially of key evidence. As a result, we now have methods for flagging and triaging evidence for validity checks.” Recognizing the problem is a wonderful start. What’s next?

Deepfake Detection in the EDRM

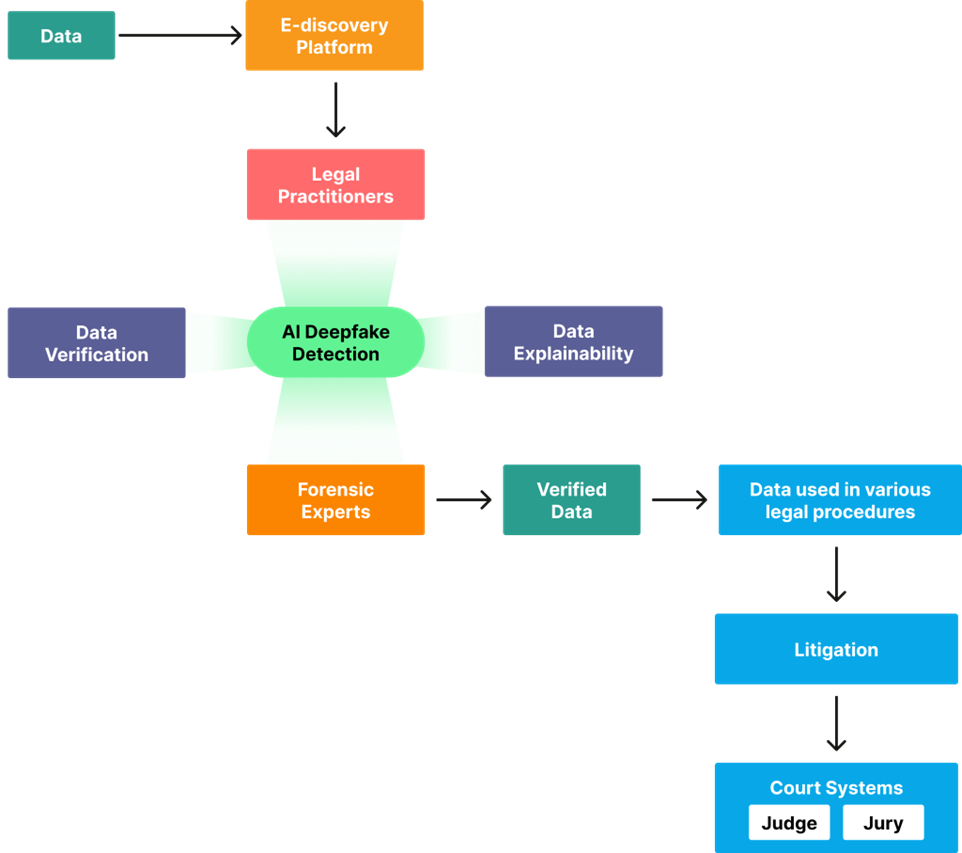

A working group composed of eDiscovery thought-leaders, an AI deepfake detection company, Clarity, and forensic experts has been exploring ways to help the legal community shift the discussion on deepfakes in the justice system from the problem space to a solution-based approach. This effort involves two key steps: first, investigating the EDRM to identify the stages where deepfake legal evidence is likely to be present, and second, highlighting the stakeholders involved at each stage of the EDRM model who can help detect Deepfake Legal Evidence. The findings of this work led to the creation of a new diagram: Deepfake Detection in the eDiscovery Reference Model.

This model helps the eDiscovery community understand three crucial aspects: where to look for deepfakes, who should look for them, and, when relevant, where they can locate themselves on the diagram and collaborate to address this challenge.

Identification and Collection Stages

In line with the EDRM Identification and Collection standards, deepfakes can emerge during the early stages of the EDRM, which are critical for determining potential sources of ESI and assessing the scope, breadth, and depth of audio and video files. During these stages, deepfakes can surface in video and audio files while collecting and authenticating unstructured data from collaboration tools like Teams, Meet, and Zoom; from other business applications (store-level security video, videos uploaded to a recruiting site, training videos, etc.); from cell phones and tablets; and from web sources like social media and professional networking sites. The main stakeholders that encounter deepfakes during these stages are forensic experts, internal IT departments, and external vendors. “Deepfake poses a significant challenge for evidence authentication in these early phases,” says Jerry Bui, a Managing Director at FTI Consulting. “The burden of verifying the veracity of multimedia files is becoming increasingly complex, and the traditional investigation methods of visual and audio analysis are no longer sufficient to identify AI-manipulated content.” According to Bui, “Forensic experts need to leverage advanced deepfake detection technologies for forensic analysis, keep pace with the state-of-the-art synthetic media creation technologies, and utilize AI detection technologies and contemporary research to adapt to the changing landscape of deepfakes generation and analysis.”

Review and Analysis Stages

By the end of the Identification and Collection stages, some ESI may already have been flagged as a potential deepfake. In the Review and Analysis stages, legal teams need to assess ESI not only for authenticity but also for relevance, content, and context. The main stakeholders at this stage are in-house and outside counsel, legal practitioners, external review vendors, forensic experts, and eDiscovery platforms. According to Stephen Dooley, the Director of Electronic Discovery and Litigation Support at Sullivan & Cromwell, “Nowadays, the task of verifying the veracity of digital legal evidence in the age of Deepfakes is easier said than done. The prevalence of high-quality synthetic content and the increasing volume of unstructured data make it harder for eDiscovery experts to distinguish between genuine and synthetic content when reviewing and analyzing files. Looking forward, the use of innovative technology to classify and flag AI contents along with a legal framework can assist in adapting to challenges to safeguard IP, protect privacy, mitigate cybersecurity events, and provide better clarity throughout the EDRM process.” More intricate and varied collaboration platforms, mobile devices, and virtual work continue to drive an uptick in data volumes and velocity, and deepfake content is putting pressure on teams to manage and proactively reduce that data before running it through costly review or relying on it to form case strategy or business decisions in an investigation or lawsuit.
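The flag-then-review flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `MediaItem` record, the `synthetic_score` field, the `triage` function, and the 0.5 threshold are all hypothetical, and the scores stand in for whatever a detection tool would actually report. It is not the API of any real eDiscovery platform or detection product.

```python
from dataclasses import dataclass


@dataclass
class MediaItem:
    """An audio or video file awaiting review (hypothetical record)."""
    file_name: str
    synthetic_score: float  # assumed detector output in [0, 1]; higher = more suspect


def triage(items, threshold=0.5):
    """Split items into those escalated for forensic review and the rest.

    Escalated items are ordered from most to least suspect so that
    experts see the highest-scoring files first.
    """
    escalated = sorted(
        (item for item in items if item.synthetic_score >= threshold),
        key=lambda item: item.synthetic_score,
        reverse=True,
    )
    routine = [item for item in items if item.synthetic_score < threshold]
    return escalated, routine


# Example with made-up scores, for illustration only.
items = [
    MediaItem("deposition_clip.mp4", 0.67),
    MediaItem("voicemail.wav", 0.12),
    MediaItem("meeting_recording.mp4", 0.91),
]
escalated, routine = triage(items)
```

A score above the threshold is a prompt for human examination, not a finding. Consistent with the article's emphasis on expert testimony, the escalated list is where a forensic expert would begin.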

Production and Presentation Stages

Deepfake legal evidence may also present itself in the final stages of the EDRM, when legal teams are producing and delivering ESI to others or displaying ESI in depositions, court hearings, and trials. Stakeholders who encounter deepfakes at this stage are mostly litigation teams, judges, juries, and expert witnesses. According to U.S. District Judge Xavier Rodriguez (W.D. Texas), deepfake legal evidence could recast the justice system. “Even in cases that do not involve fake videos, the very existence of deepfakes will complicate the task of evaluating real evidence. If juries start to doubt that it is possible to know what is real, their skepticism could undermine the justice system as a whole.” Considering these circumstances, “significant costs will be expended in proving or disproving the authenticity of the exhibit through expert testimony, and trial judges will have to navigate what may be outdated rules of evidence to determine that only sufficiently authentic, valid, and reliable evidence is admitted.” This also ties back to the Identification and Collection and Review and Analysis phases, as any technology being used to identify, review, and analyze deepfake legal evidence must be reliable.
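The stage-by-stage breakdown above can be restated as a small lookup table, which may help readers locate themselves in the model. The following Python sketch is only an illustration: the stage groupings and stakeholder lists are taken from the text, while the names `STAKEHOLDERS_BY_STAGE` and `stages_for` are hypothetical and not part of the EDRM diagram itself.

```python
# Stage groupings and the stakeholders named for each, as described in the text.
STAKEHOLDERS_BY_STAGE = {
    "Identification and Collection": [
        "forensic experts",
        "internal IT departments",
        "external vendors",
    ],
    "Review and Analysis": [
        "in-house and outside counsel",
        "legal practitioners",
        "external review vendors",
        "forensic experts",
        "eDiscovery platforms",
    ],
    "Production and Presentation": [
        "litigation teams",
        "judges",
        "juries",
        "expert witnesses",
    ],
}


def stages_for(stakeholder: str) -> list[str]:
    """Return the stage groupings in which the given stakeholder is named."""
    return [
        stage
        for stage, stakeholders in STAKEHOLDERS_BY_STAGE.items()
        if stakeholder in stakeholders
    ]
```

For example, `stages_for("forensic experts")` returns both the Identification and Collection and the Review and Analysis groupings, reflecting that forensic experts are named in both.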

A Strategic Framework for Collaborative Solutions

The current reality casts a shadow of uncertainty over the foundations of truth as we know it – which is not sustainable. What can we do to restore equilibrium in our judicial system?

AI Technology adoption: A recent report by Relativity and FTI suggests that legal practitioners are cautiously optimistic about how advancing technology will help them manage demanding workloads and improve their response to digital risks in eDiscovery. The report suggests that in 2024, many legal practitioners are more than ready to embrace AI solutions to address their data challenges in eDiscovery. This is an essential shift in mindset for the legal industry, which paves the way for increased adoption of AI and generative technology in the coming months and years.

Collaboration: Technology alone cannot solve the deepfakes challenge. “There is a need to move from silos to synergy and build a coherent strategy that will leverage technology in a collaborative way,” say Kaylee Walstad, chief strategy officer of EDRM, and Gil Avriel, co-founder and chief strategy officer of Clarity, an AI cybersecurity company that offers AI deepfake detection solutions on the Relativity platform. “Thanks to the Deepfake Detection in the EDRM, we can now develop an AI collaborative framework that will help experts do their work better. There is nothing more exciting and optimistic than humans working together in synergy to solve wicked problems caused by machines.”

“The growing willingness to adopt AI Technologies and the need to work together can mobilize different stakeholders to implement successful human-AI collaborations across disciplines that leverage technology collaboratively,” say Kaylee Walstad and Gil Avriel.

Authenticity and Explainability of Deepfakes: eDiscovery platforms can play a leading role in fostering collaboration by connecting legal practitioners with third-party deepfake detection solutions on their platforms. This will give legal practitioners access to the state-of-the-art solutions they need to run deepfake detection models at scale over eDiscovery data and verify the authenticity of digital legal evidence.

Deepfake detection technology helps lawyers identify a problem with the authenticity of a piece of video or audio evidence. Yet, as the case progresses, there is a need to explain why specific evidence has an authenticity problem. A high level of explainability should be based on the reliable application of principles and methods to the facts of the case. Considering that explainability and the level of trust in AI models are closely intertwined, how can the legal community improve deepfake detection explainability in courts and other legal procedures?

Leverage AI Detection technology for better reliability and explainability by forensic experts: A recent report by Relativity and FTI indicates that most legal practitioners follow the “Trust, but Verify” approach for AI adoption. As contemporary data suggests, lawyers’ comfort level with AI rests on the assumption that AI use cases will maintain a healthy degree of human oversight, and lawyers are also more likely to trust the use of AI by someone who is more seasoned and has the experience to know whether a response is legitimate.

As part of the collaboration framework, deepfake detection companies should collaborate with forensic experts and expert witnesses when engaging in digital forensic preservation, investigation, and testimony. They should all leverage AI detection technology to explain in layman’s terms the work of the AI machines and better articulate to judges and juries how they verified the final output for accuracy. Based on that collaboration, counsel will then incorporate the work product from the AI deepfake detection technology and the forensic expert into compelling legal arguments. Such collaboration also provides the basis to meet the heightened requirements of amended Fed. R. Evid. 702 and to help the court meet its “gatekeeping” function to ensure fair results in the justice system.

Chart 2: A framework for collaborative solutions.

The Path to Solutions

The new Deepfake Detection in the EDRM is a call to action. Deepfakes in the justice system are becoming a pressing challenge, and we have no time to lose and no choice but to deal with it seriously, responsibly, and systematically. We, the people of law, AI technology, and forensics, must collaborate. We have only one justice system, and truth matters. The new Deepfake Detection in the EDRM and the proposed framework for the collaborative technological solution will be presented at the Relativity Fest Annual Conference (Chicago, September 25th-27th) in a panel titled “Deepfakes in eDiscovery: A Joint Framework for Solutions.”

Read the original release here.