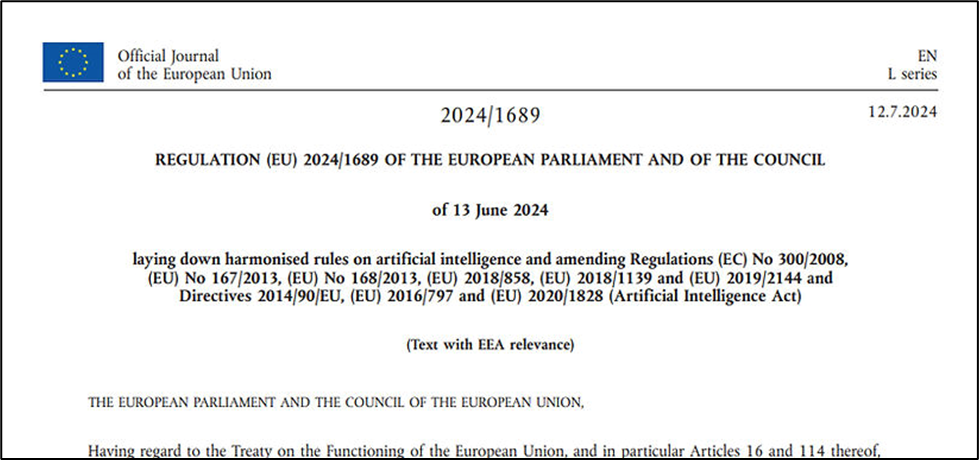

This week Regulation (EU) 2024/1689, “laying down harmonised rules on artificial intelligence,” entered into force. The European Artificial Intelligence Act will regulate the use of artificial intelligence with the aim of protecting the rights and safety of EU citizens.

The regulation does not seek to restrict spam filters or AI that recommends products to consumers. It will require chatbots to disclose to the people they are communicating with that they are AI and not human beings. Content produced by generative AI, such as images or video, will need to be flagged as created by artificial intelligence.

Recital 30 of Regulation 2024/1689 explains the prohibition on using biometric data to infer a person’s sexual orientation, religion, race, sex life, or political opinions, although it provides an exception for the lawful filtering of biometric data in compliance with other EU and member state laws, specifically noting that police forces can sort images by hair or eye color to identify suspects.

Recital 31 addresses the prohibition of social scoring systems, which use AI to evaluate the trustworthiness of an individual:

> AI systems providing social scoring of natural persons by public or private actors may lead to discriminatory outcomes and the exclusion of certain groups. They may violate the right to dignity and non-discrimination and the values of equality and justice. Such AI systems evaluate or classify natural persons or groups thereof on the basis of multiple data points related to their social behaviour in multiple contexts or known, inferred or predicted personal or personality characteristics over certain periods of time. The social score obtained from such AI systems may lead to the detrimental or unfavourable treatment of natural persons or whole groups thereof in social contexts, which are unrelated to the context in which the data was originally generated or collected or to a detrimental treatment that is disproportionate or unjustified to the gravity of their social behaviour. AI systems entailing such unacceptable scoring practices and leading to such detrimental or unfavourable outcomes should therefore be prohibited. That prohibition should not affect lawful evaluation practices of natural persons that are carried out for a specific purpose in accordance with Union and national law.

For comparison, the People’s Republic of China’s Social Credit System is used to place individual debtors on blacklists, but it is mostly used to enforce regulations against companies.

The Act requires AI systems used for healthcare or employee recruitment to be subject to human oversight and to use high-quality data. High-risk AI systems will need to be registered in a database maintained by the EU and be covered by an EU declaration of conformity.

High-risk AI systems will have to bear a CE marking (physical or digital) to show that they conform with the Act. The CE (‘conformité européenne’) marking is used widely to show that a product conforms with EU health and safety regulations.

AI developers will not have to fully comply with the EU AI Act until August 2, 2027.

Not only is it becoming common for law firms to submit briefs drafted with the assistance of artificial intelligence software; courts are catching them in the act and finding that some of the case law cited in these briefs is completely fictional. AI ‘hallucinations’ are instances where AI software generates false information in response to a question.

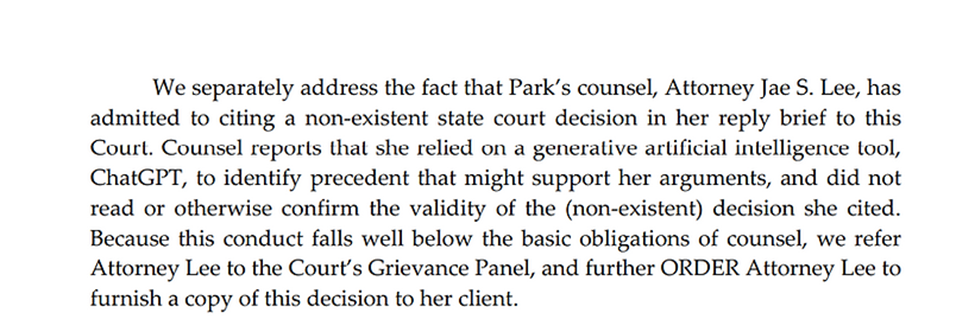

In January, the Second Circuit issued a per curiam decision, Op., Park v. Kim, No. 22-2057 (2d Cir. Jan. 30, 2024), ECF No. 178-1, in which the court found that:

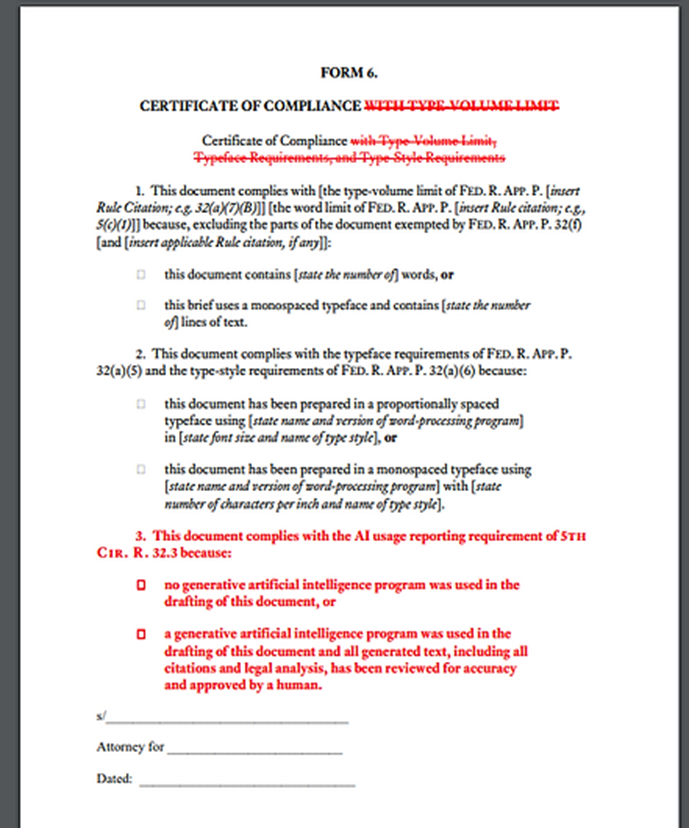

The attorney who filed the brief was referred to the Court’s Grievance Panel. The Court cited the 5th Circuit’s amendment to its rules, which requires attorneys to certify either that no generative artificial intelligence was used in preparing a filing or, at least, that the filing was reviewed for accuracy by a human.

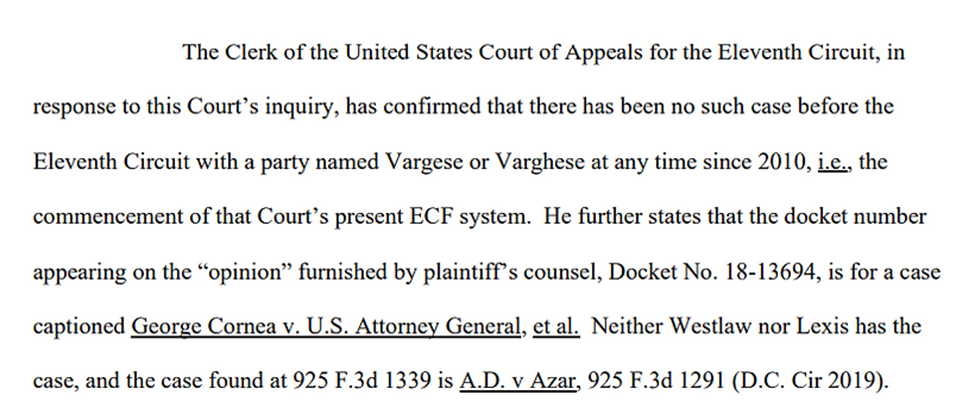

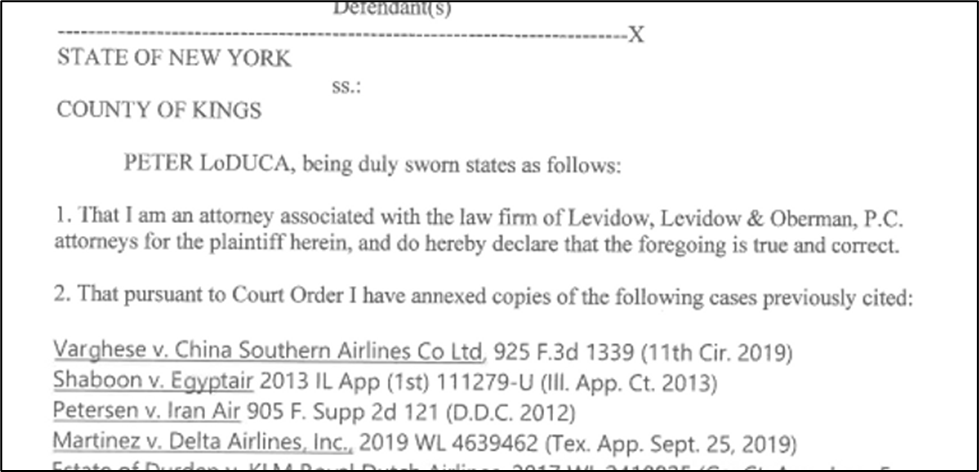

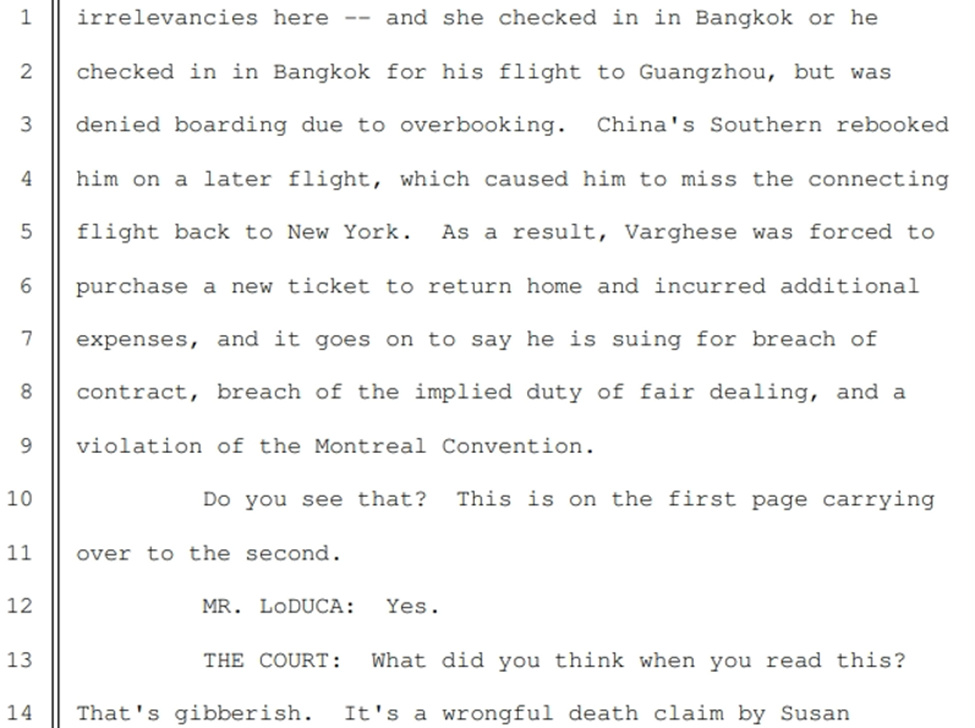

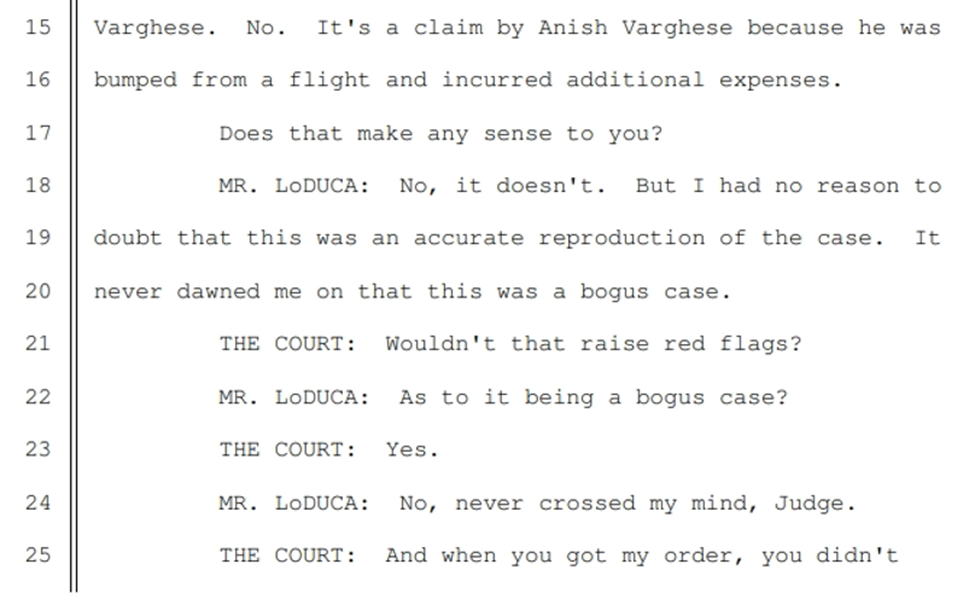

In May 2023, Judge Castel of the United States District Court for the Southern District of New York issued an Order to Show Cause why the plaintiff’s counsel should not be sanctioned pursuant to Fed. R. Civ. P. 11 and the Court’s inherent authority for submitting an affirmation in opposition to a motion to dismiss that included citations to six “bogus judicial decisions with bogus quotes and bogus internal citations.” Order to Show Cause at 1, Mata v. Avianca, Inc., No. 22-cv-1461 (PKC) (S.D.N.Y. May 4, 2023), ECF No. 31. The affirmation cited reporter volumes and pages that actually belong to other cases:

Id. at 2.

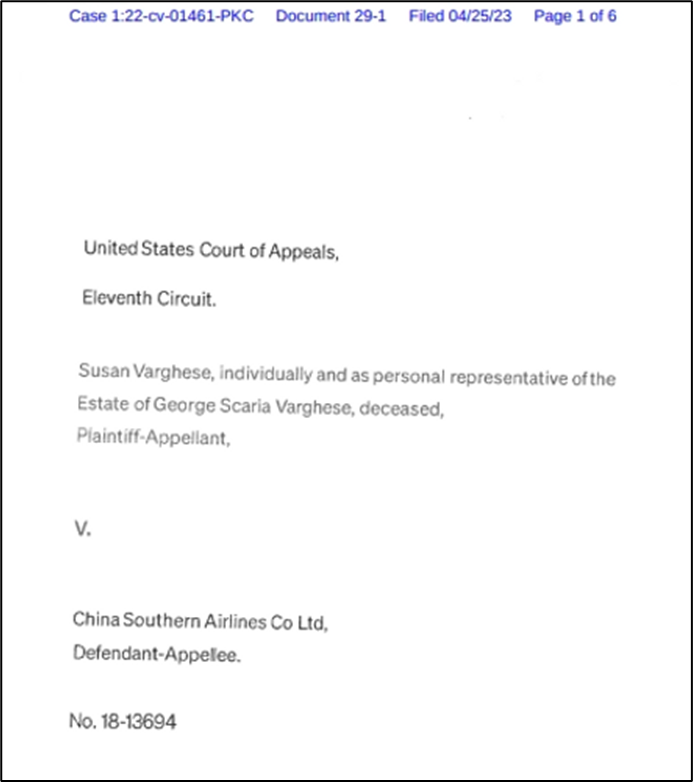

Not only did the plaintiff’s affirmation cite Varghese v. China Southern Airlines Co., Ltd., 925 F.3d 1339 (11th Cir. 2019), and other cases that are completely made up, but when the court ordered plaintiff’s counsel to submit copies of these cases (Order, Mata v. Avianca, Inc., No. 22-cv-1461 (PKC) (S.D.N.Y. Apr. 11, 2023), ECF No. 25), counsel in turn filed an affidavit attaching AI-generated copies of the imaginary cases! Affidavit, Mata v. Avianca, Inc., No. 22-cv-1461 (PKC) (S.D.N.Y. Apr. 25, 2023), ECF No. 29.

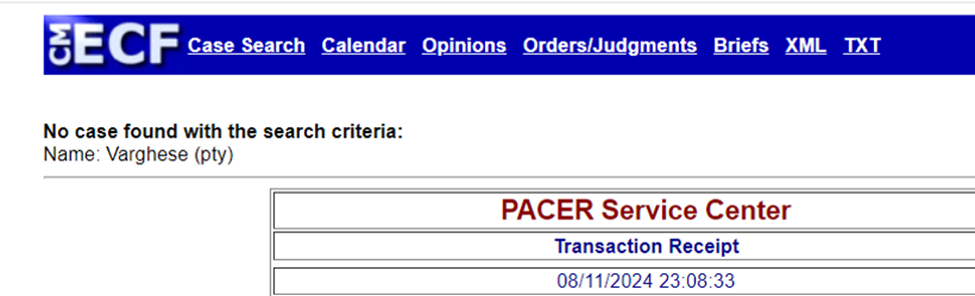

A copy of Varghese was filed on PACER, but it’s something that AI simply invented:

PACER doesn’t lie!

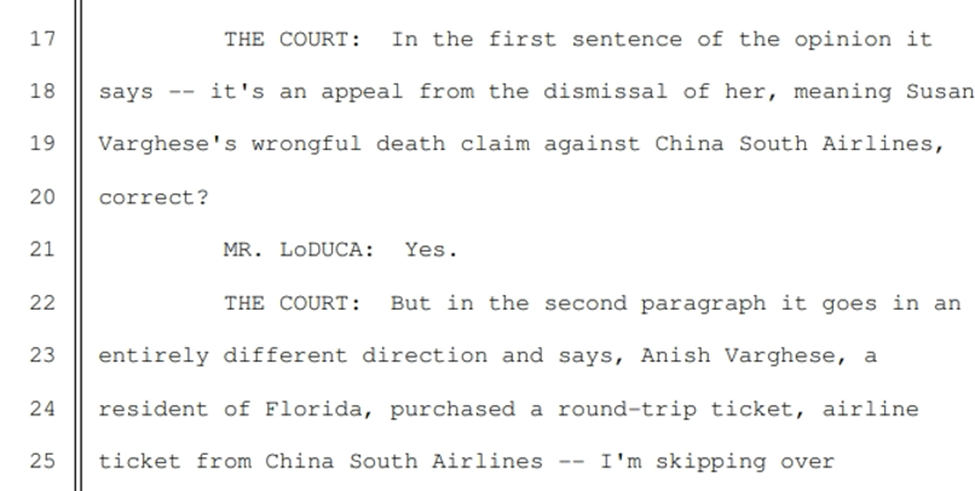

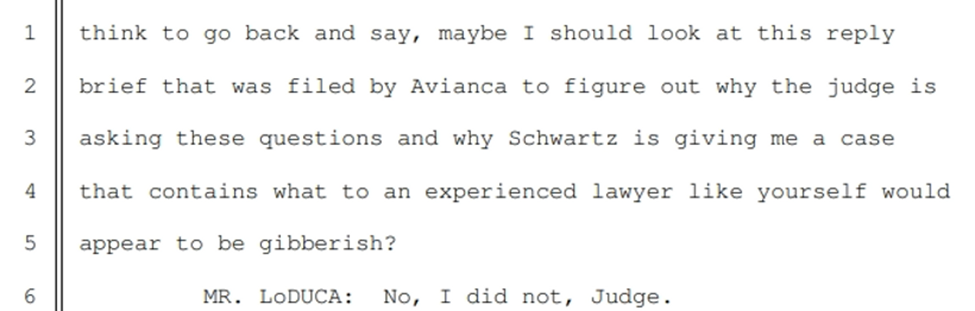

At a subsequent hearing, Judge Castel excoriated the plaintiff’s attorney for submitting the fictitious case:

Hr’g Tr. at 15:17-17:6, Mata v. Avianca, Inc., No. 22-cv-1461 (PKC) (S.D.N.Y. Apr. 11, 2023), ECF No. 52. So the case that ChatGPT invented did not even have an internal logic of its own.

The court sanctioned the plaintiff’s attorney under Rule 11, fined him $5,000, and ordered him to send a letter to each judge falsely identified as the author of one of the fictitious cases cited in the affirmation. Mata was dismissed as untimely under the Montreal Convention, which governed the plaintiff’s claim for an injury suffered during an international flight.

Tonight’s post shows how you can use a PowerShell script to save each page of an online book as an image and then combine the images into a single PDF.

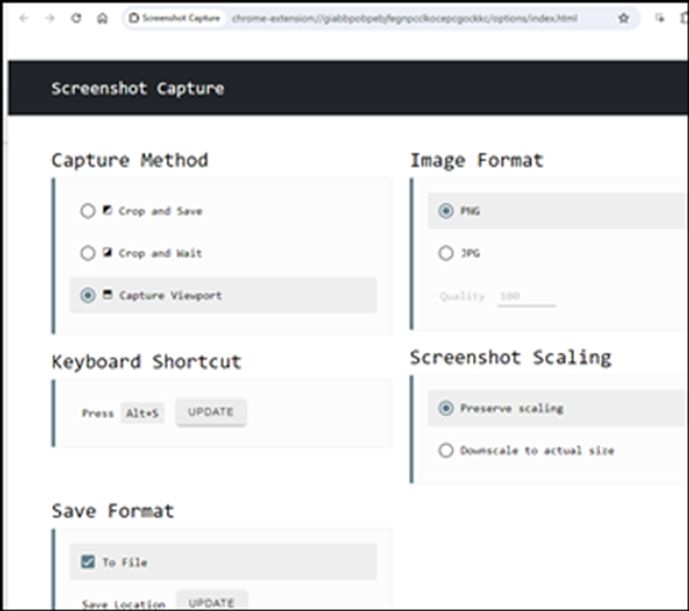

The process uses a Chrome extension called Screenshot Capture. See: https://chromewebstore.google.com/detail/screenshot-capture/giabbpobpebjfegnpcclkocepcgockkc

Once it is installed, pressing ALT + S automatically saves a .png file of the open page to a specified location. Use these settings, with the ‘Capture Viewport’ option selected:

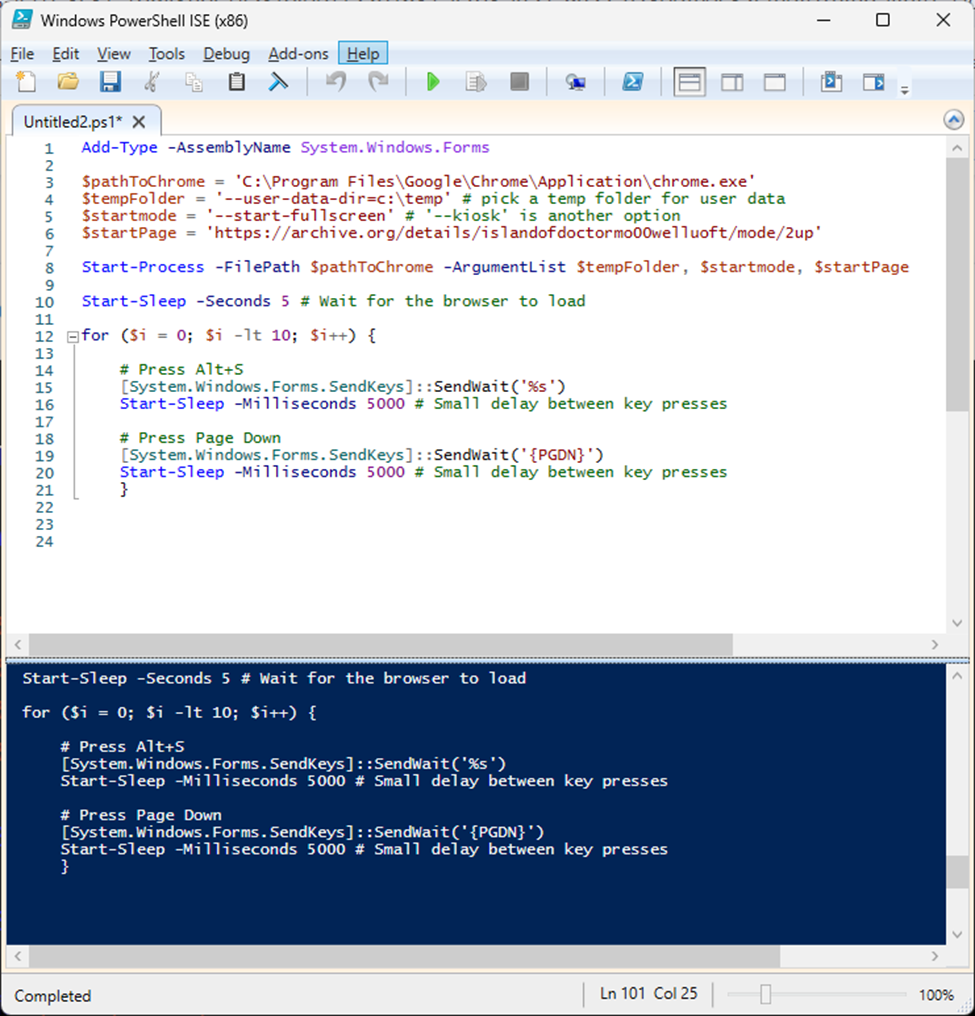

This is the script:

```powershell
Add-Type -AssemblyName System.Windows.Forms

$pathToChrome = 'C:\Program Files\Google\Chrome\Application\chrome.exe'
$tempFolder = '--user-data-dir=c:\temp' # temp folder for user data; Chrome treats it as a separate profile, so Screenshot Capture must be installed there
$startmode = '--start-fullscreen' # '--kiosk' is another option
$startPage = 'https://archive.org/details/islandofdoctormo00welluoft/mode/2up'

Start-Process -FilePath $pathToChrome -ArgumentList $tempFolder, $startmode, $startPage
Start-Sleep -Seconds 5 # Wait for the browser to load

for ($i = 0; $i -lt 10; $i++) {
    # Press Alt+S so Screenshot Capture saves the visible page
    [System.Windows.Forms.SendKeys]::SendWait('%s')
    Start-Sleep -Milliseconds 5000 # Wait 5 seconds for the screenshot to be saved

    # Press Page Down to advance to the next page
    [System.Windows.Forms.SendKeys]::SendWait('{PGDN}')
    Start-Sleep -Milliseconds 5000 # Wait 5 seconds for the next page to load
}
```

Be sure to confirm that the path assigned to ‘$pathToChrome’ is where Chrome is installed on your PC.

Enter the URL of the web page on the line beginning ‘$startPage’.

Indicate the number of pages you need to grab in the loop condition (in this example it’s 10 pages):

```powershell
for ($i = 0; $i -lt 10; $i++) {
```

The PowerShell script in effect presses ‘ALT + S’ and then the page down key for you.

The script can get thrown off if a page takes a long time to load, so after each key press it pauses for a set number of milliseconds; 5000 milliseconds equals 5 seconds. If pages load slowly, increase this value.
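Because each pass through the loop waits twice (once after ALT + S and once after Page Down), the run time grows with both the page count and the delay. The function below is a hypothetical helper, not part of the original script, that estimates the total wait time in seconds. It assumes the 5-second startup wait and the two pauses per page used in the script, and it ignores the time Chrome and the extension themselves take to respond.

```powershell
# Hypothetical helper (not part of the original script): estimates how long
# the capture loop will run, counting only the Start-Sleep pauses.
function Get-CaptureDuration {
    param(
        [int]$PageCount = 10,       # number used in the for loop condition
        [int]$DelayMs = 5000,       # pause after each key press, in milliseconds
        [int]$StartupSeconds = 5    # initial wait for Chrome to load
    )
    # Two pauses per page: one after ALT + S, one after Page Down
    $loopSeconds = $PageCount * 2 * $DelayMs / 1000
    return $StartupSeconds + $loopSeconds
}

# With the values in the script: 5 + (10 * 2 * 5000 / 1000) = 105 seconds
Get-CaptureDuration -PageCount 10 -DelayMs 5000
```

For example, a 300-page book at the 5-second delay would keep the loop running for about 300 × 2 × 5 = 3,000 seconds, or 50 minutes, so it may be worth testing a shorter delay on a few pages first.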

In Chrome, be sure to enter full screen mode by pressing F11.

Paste the script into the white script pane at the top of Windows PowerShell ISE, and then press the play (Run Script) button.

After the script ends and the .png files are generated, you can easily convert them to a single PDF in Adobe Acrobat.
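Before combining the images, it can help to confirm that every page was captured and that the files are in page order. The sketch below is a hypothetical helper; it assumes Screenshot Capture saves all of the .png files to a single folder (the path in the example is a placeholder) and that the order in which the files were written matches the page order, since the extension's file names may not sort correctly by name.

```powershell
# Hypothetical helper: lists the captured .png files in the order they were
# saved and warns if the number of files differs from the expected page count.
function Get-CapturedPage {
    param(
        [Parameter(Mandatory)][string]$Folder,  # folder Screenshot Capture saves to
        [int]$ExpectedCount = 10                 # same number used in the for loop
    )
    $files = @(Get-ChildItem -Path $Folder -Filter '*.png' -File |
        Sort-Object -Property LastWriteTime)
    if ($files.Count -ne $ExpectedCount) {
        Write-Warning "Expected $ExpectedCount pages but found $($files.Count) .png files in $Folder"
    }
    return $files
}

# Example (the folder path is a placeholder):
# Get-CapturedPage -Folder 'C:\Users\me\Downloads\Screenshots' -ExpectedCount 10 |
#     Select-Object Name, LastWriteTime
```

Sorting by LastWriteTime rather than by name is a design choice for the case where file names do not sort in capture order; if the extension's names already sort correctly, sorting by Name works just as well.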

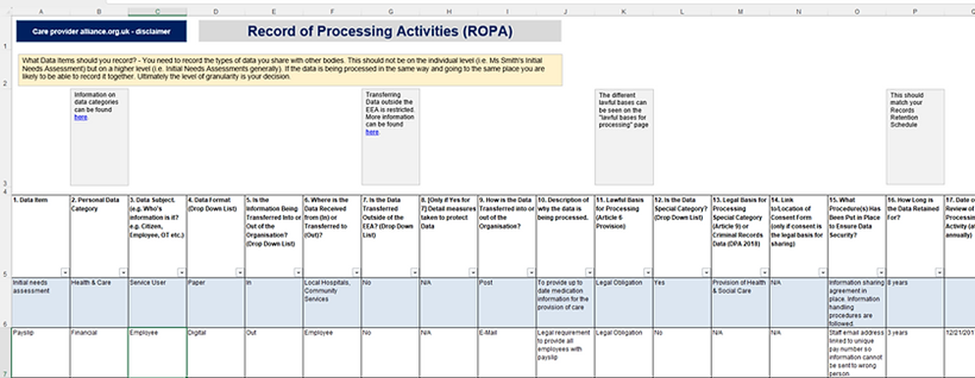

Article 30 of the General Data Protection Regulation requires controllers of personal data to maintain a ‘record of processing activities’ (ROPA), which includes seven key pieces of information:

- Controller name and contact information.

- Purpose for the processing.

- The categories of personal data and data subjects.

- The categories of recipients to whom data will be disclosed.

- Transfers of data to third countries or international organizations.

- The time range for which the data will be held and then erased.

- The security measures taken to protect the data.
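As a rough illustration of how the seven items above map onto a spreadsheet row, the sketch below builds one ROPA entry as a PowerShell object and exports it to a CSV file that opens in Excel. The column names and sample values are hypothetical; they are not taken from the NHS or CNIL templates.

```powershell
# Hypothetical ROPA entry: one column per Article 30 item.
# Column names and values are illustrative only.
$ropaEntry = [PSCustomObject]@{
    ControllerContact            = 'Example Ltd, privacy@example.com'
    Purpose                      = 'Payroll administration'
    DataSubjectAndDataCategories = 'Employees; bank details, salary'
    RecipientCategories          = 'Payroll provider; tax authority'
    ThirdCountryTransfers        = 'None'
    RetentionPeriod              = '6 years after employment ends, then erased'
    SecurityMeasures             = 'Encryption at rest; role-based access'
}

# Each additional processing activity would be another row in the same file.
$csvPath = Join-Path ([System.IO.Path]::GetTempPath()) 'ropa.csv'
$ropaEntry | Export-Csv -Path $csvPath -NoTypeInformation
Import-Csv -Path $csvPath | Format-List
```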

You can find a good example of a spreadsheet used to track ROPA data on the site of the UK’s National Health Service.

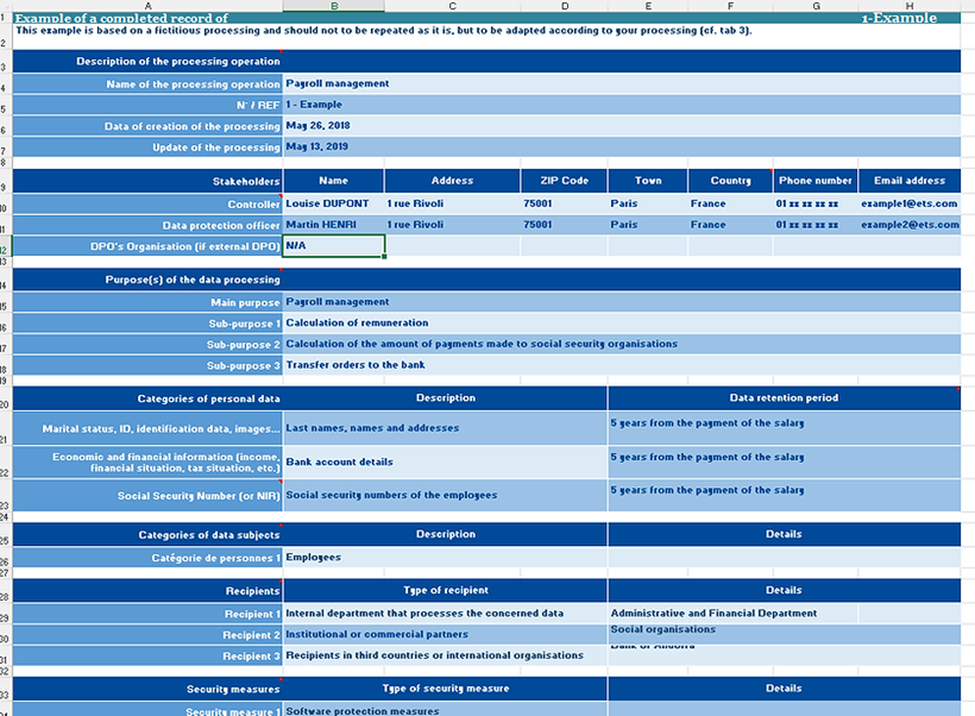

Compare this with an example on the site of the Commission nationale de l’informatique et des libertés (CNIL), the French agency charged with enforcing data privacy laws.

Supporting documentation is often required for ROPAs, such as vendor DPAs (Data Processing Agreements), which set out the terms under which a service provider processes personal data for a company, and DSAR (Data Subject Access Request) responses, which are actions taken to remove, alter, or access personal data at the request of the person whose data is involved.

Organizations often prepare data maps to track the personal data they hold. Some service providers, such as BigID, have developed systems that help companies assess the personal data on their networks.
